New York Times Lawsuit Based On Misuse Of ChatGPT

- News

- January 9, 2024

OpenAI published a response to The New York Times' lawsuit. It alleges that The New York Times used manipulative prompting techniques to induce ChatGPT to regurgitate lengthy excerpts, and that the lawsuit is based on misuse of ChatGPT in order to "cherry-pick" examples.

The New York Times Lawsuit Against OpenAI

The New York Times filed a lawsuit against OpenAI (and Microsoft) for copyright infringement. Among other complaints, it alleges that ChatGPT "recites Times content verbatim."

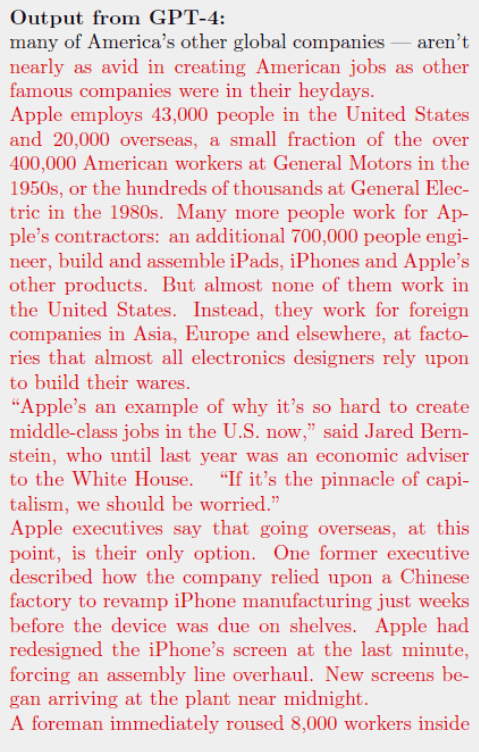

The lawsuit presented evidence that GPT-4 could output large amounts of New York Times content without attribution, as proof that GPT-4 infringes on The New York Times' content.

The accusation that GPT-4 outputs exact copies of New York Times content is important because it counters OpenAI's insistence that its use of data is transformative, a concept from the legal doctrine of fair use.

The United States Copyright Office defines fair use and explains what makes a use of copyrighted content transformative:

"Fair use is a legal doctrine that promotes freedom of expression by permitting the unlicensed use of copyright-protected works in certain circumstances.

…'transformative' uses are more likely to be considered fair. Transformative uses are those that add something new, with a further purpose or different character, and do not substitute for the original use of the work."

That's why it's important for The New York Times to assert that OpenAI's use of its content is not fair use.

The New York Times lawsuit against OpenAI states:

"Defendants insist that their conduct is protected as 'fair use' because their unlicensed use of copyrighted content to train GenAI models serves a new 'transformative' purpose. But there is nothing 'transformative' about using The Times's content …Because the outputs of Defendants' GenAI models compete with and closely mimic the inputs used to train them, copying Times works for that purpose is not fair use."

The following screenshot shows evidence that GPT-4 outputs exact copies of the Times' content. The content in red is original content created by The New York Times that was output by GPT-4.

OpenAI Response Undermines NYTimes Lawsuit Claims

OpenAI offered a strong rebuttal of the claims made in the New York Times lawsuit. It said the Times' decision to go to court surprised OpenAI because it had assumed that the negotiations were progressing toward a resolution.

Most importantly, OpenAI rebutted The New York Times' claim that GPT-4 outputs verbatim content. OpenAI explained that GPT-4 is designed not to output verbatim content. It also said that The New York Times used prompting techniques specifically designed to break GPT-4's guardrails in order to produce the disputed output. This undermines The New York Times' implication that verbatim output is typical GPT-4 behavior.

This kind of prompting, designed to break ChatGPT in order to generate undesired output, is called adversarial prompting.

Adversarial Prompting Attacks

Generative AI is sensitive to the kinds of prompts (requests) made to it. Engineers work to block misuse, but people keep finding new prompts that get around the guardrails meant to prevent undesired output.

Techniques for producing unintended output are called adversarial prompting. OpenAI accuses The New York Times of using them to manufacture evidence that GPT-4's use of copyrighted content is not transformative.

OpenAI's claim that The New York Times misused GPT-4 is important because it undermines the lawsuit's insinuation that generating verbatim copyrighted content is typical behavior.

That kind of adversarial prompting also violates OpenAI's terms of use, which state:

What You Cannot Do

- Use our Services in a way that infringes, misappropriates or violates anyone's rights.

- Interfere with or disrupt our Services, including circumvent any rate limits or restrictions or bypass any protective measures or safety mitigations we put on our Services.

OpenAI Claims Lawsuit Based On Manipulated Prompts

OpenAI's rebuttal claims that The New York Times used manipulated prompts specifically designed to subvert GPT-4's guardrails in order to generate verbatim content.

OpenAI writes:

"It seems they intentionally manipulated prompts, often including lengthy excerpts of articles, in order to get our model to regurgitate.

Even when using such prompts, our models don't typically behave the way The New York Times insinuates, which suggests they either instructed the model to regurgitate or cherry-picked their examples from many attempts."

OpenAI also fired back at The New York Times lawsuit. It said that the methods The New York Times used to generate verbatim content were a misuse of the service and a violation of allowed user activity.

They write:

"Despite their claims, this misuse is not typical or allowed user activity."

OpenAI ended by stating that it continues to build resistance against the kinds of adversarial prompt attacks used by The New York Times.

They write:

"Regardless, we are continually making our systems more resistant to adversarial attacks to regurgitate training data, and have already made much progress in our recent models."

OpenAI backed up its claim of diligence in respecting copyright by citing its July 2023 response to reports that ChatGPT was producing verbatim responses.

We've learned that ChatGPT's "Browse" beta can occasionally display content in ways we don't want, e.g. if a user specifically asks for a URL's full text, it may inadvertently fulfill this request. We are disabling Browse while we fix this—want to do right by content owners.

— OpenAI (@OpenAI) July 4, 2023

The New York Times Versus OpenAI

There are always two sides to a story. OpenAI has now released its side, which argues that The New York Times' claims rest on adversarial attacks and a misuse of ChatGPT designed to elicit verbatim responses.

Read OpenAI's response:

OpenAI and journalism:

We support journalism, partner with news organizations, and believe The New York Times lawsuit is without merit.

Featured Image by Shutterstock/pizzastereo
