To block, or not to block • Yoast

October 26, 2023

AI web crawlers like GPTBot, CCBot, and Google-Extended play a significant role in gathering training content for AI models. These bots crawl websites, collect data, and contribute to developing and improving Large Language Models (LLMs) and artificial intelligence. However, many people have asked us the same question: should you block these AI bots in your robots.txt file to protect your content? This article delves into the pros and cons of blocking AI robots and explores the implications.

Taming of the AI bots

This year, there has been a growing debate in our industry about whether to allow or block AI bots from accessing and indexing our content. On the one hand, there are concerns about these bots’ potential misuse or unauthorized scraping of website data. You may worry about your intellectual property being used without permission or about sensitive data being compromised. Blocking AI web crawlers can be a protective measure to safeguard content and maintain control over its usage.

On the other hand, blocking these bots may have drawbacks. AI models rely heavily on large volumes of training data to produce accurate results. By blocking these crawlers, you might limit the availability of quality training data needed to develop and improve AI models. Additionally, blocking specific bots may affect the visibility of websites in search results, potentially hurting discoverability. Plus, blocking AI may limit your own use of AI tools on your website.

Examples of industries blocking bots

The field is still very new, as search engines are only beginning to offer blocking options. In response to the growing need for content control, Google has announced Google-Extended, an option for publishers to actively block Bard from training on their content.

This new development comes after Google received feedback from publishers stressing the importance of having greater control over their content. With Google-Extended, you can decide whether your content may be accessed and used for AI training. OpenAI (GPTBot) and Common Crawl (CCBot) are other significant crawlers whose access you can control with robots.txt. Microsoft Bing uses the NOCACHE and NOARCHIVE meta tags to block Bing Chat from training on content.
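For the robots.txt-based crawlers named above, blocking comes down to one group per user-agent token with a `Disallow: /` rule. The Python sketch below generates such groups for GPTBot, CCBot, and Google-Extended and then checks them with the standard library’s `urllib.robotparser`. The helper names `build_ai_block_rules` and `is_allowed` and the example URL are hypothetical, and the token list covers only the bots mentioned in this article, so check each vendor’s documentation for current spellings. Bing’s meta tags live in your page HTML, not robots.txt, so they aren’t covered here.

```python
from urllib.robotparser import RobotFileParser

# Tokens for the crawlers named in this article (assumed current spellings).
AI_CRAWLERS = ["GPTBot", "CCBot", "Google-Extended"]


def build_ai_block_rules(agents):
    """Return robots.txt text with one 'Disallow: /' group per user agent."""
    groups = [f"User-agent: {agent}\nDisallow: /" for agent in agents]
    # Groups are separated by a blank line.
    return "\n\n".join(groups) + "\n"


def is_allowed(robots_txt, agent, url):
    """Ask the standard-library parser whether `agent` may fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    rules = build_ai_block_rules(AI_CRAWLERS)
    print(rules)
    for agent in AI_CRAWLERS + ["Googlebot"]:
        print(agent, is_allowed(rules, agent, "https://example.com/blog/post"))
```

Running it prints the three groups and reports `False` for the three AI crawlers but `True` for Googlebot, because no group names Googlebot. Naming each bot individually, rather than using a catch-all `User-agent: *`, is what leaves search crawlers untouched.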

News publishers

It’s worth noting that many major news websites have taken a firm stance. Many publications block these crawlers to safeguard their journalistic work. According to research by Palewire, 47% of the tracked news websites already block AI bots. These reputable organizations understand the importance of protecting their content from unauthorized scraping and potential manipulation.

By blocking AI robots, they ensure the integrity of their reporting and maintain their status as trusted sources of information. Their collective decision to protect their work highlights the importance of content preservation. The industry needs to find a balance in granting AI robots access for training.

Ecommerce websites

In ecommerce, site owners face another significant consideration. Online retailers with unique product descriptions and other product-related content may have strong reasons to block AI bots. These bots can scrape and replicate their carefully crafted product descriptions. Product content plays a vital role in attracting and engaging customers.

Ecommerce sites invest significant effort in cultivating a distinctive brand identity and presenting their products compellingly. Blocking AI bots is a proactive measure to safeguard their competitive advantage, intellectual property, and overall business success. By preserving their unique content, online stores can better ensure the authenticity and exclusivity of their work.

Implications of (not) blocking AI training bots

As the AI industry evolves and AI models become more sophisticated, you must consider the implications of allowing or blocking AI bots. Determining the right approach involves weighing the benefits of content protection and data security against potential limitations in AI model development and visibility on the web. We’ll explore some pros and cons of blocking AI bots and provide recommendations.

Pros of blocking AI robots

Blocking AI bots from accessing your content may have its drawbacks, but there are potential benefits you should consider:

Protection of intellectual property: By blocking AI bots like OpenAI’s GPTBot, CCBot, Google-Extended (which covers Google Bard), and others, you can prevent unauthorized content scraping by crawlers that honor robots.txt. This helps safeguard your intellectual property and ensures that your hard work and unique creations are not used without permission.

Server load optimization: Many robots crawl your site, and each one adds load to your server. Allowing bots like GPTBot and CCBot on top of that adds up. Blocking these bots can save server resources.

Content control: Blocking AI bots gives you greater control over your content and its use. It lets you dictate who can access and use the content, which helps keep its use aligned with your intended purpose and context.

Protection from unwanted associations: AI could associate a website’s content with misleading or inappropriate information. Blocking these bots reduces the risk of such associations, helping you maintain the integrity and reputation of your brand.
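To judge whether the server-load argument applies to your site, you can first count how often AI crawlers actually hit it. The sketch below assumes access logs in the widely used “combined” format, where the user agent is the last double-quoted field; the function name, regex, and sample log lines are made up for illustration. Google-Extended isn’t in the list because, according to Google’s documentation, it is a robots.txt token without its own user-agent string, so it won’t appear in logs. User-agent strings can also be spoofed, so treat the counts as an estimate.

```python
import re
from collections import Counter

# Assumption: "combined" log format, where the user agent is the last
# double-quoted field on each line. Adjust the pattern for your server.
USER_AGENT_FIELD = re.compile(r'"([^"]*)"\s*$')

# Crawlers that identify themselves in the User-Agent header.
AI_CRAWLERS = ("GPTBot", "CCBot")


def count_ai_requests(log_lines, crawlers=AI_CRAWLERS):
    """Count requests per crawler via case-insensitive substring matching."""
    counts = Counter()
    for line in log_lines:
        match = USER_AGENT_FIELD.search(line)
        if match is None:
            continue  # skip lines that don't end in a quoted user agent
        user_agent = match.group(1).lower()
        for name in crawlers:
            if name.lower() in user_agent:
                counts[name] += 1
                break
    return counts


if __name__ == "__main__":
    # Made-up sample lines for illustration only.
    sample = [
        '203.0.113.5 - - [26/Oct/2023:10:00:00 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
        '203.0.113.6 - - [26/Oct/2023:10:00:01 +0000] "GET /shop HTTP/1.1" 200 900 "-" "CCBot/2.0"',
        '203.0.113.7 - - [26/Oct/2023:10:00:02 +0000] "GET /blog HTTP/1.1" 200 700 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
    ]
    print(count_ai_requests(sample))
```

Comparing these counts with your total request volume over a week or so gives a rough sense of how much load blocking would actually save.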

When deciding what to do with these crawlers, you must carefully weigh the advantages against the drawbacks. Evaluating your specific circumstances, content, and priorities is essential to making an informed decision. By thoroughly examining the pros and cons, you can find an option that aligns with your unique needs and goals.

Cons of blocking AI bots

While blocking AI robots may offer particular advantages, it also has potential drawbacks. You should carefully evaluate these implications before doing it:

Limiting yourself from using AI models on your website: It’s important to focus on the site owner’s perspective and examine how blocking may affect them as users. One significant aspect is the potential impact on people who rely on AI bots like ChatGPT to generate their own content. For instance, people who use these tools to draft their posts may have specific requirements, such as writing in their unique tone of voice. Blocking AI robots may limit their ability to give the bot their URLs or content so it can generate drafts that closely match their desired style. In such cases, the hindrance caused by blocking the bot can significantly outweigh any concerns about training AI models they may not use directly.

Impact on AI model training: AI models, like large language models (LLMs), rely on vast amounts of training data to improve their accuracy and capabilities. By blocking AI robots, you limit the availability of valuable data that could contribute to developing and improving these models. This could hinder the progress and effectiveness of AI technologies.

Visibility and indexing: AI bots, particularly those associated with search engines, may play a role in website discoverability and visibility. Blocking these bots may affect a site’s visibility in search engine results, potentially resulting in missed opportunities for exposure. Take Google’s development of the Search Generative Experience (SGE), for example. Although Google has said that blocking the Google-Extended crawler doesn’t affect content in SGE, only Google Bard, that might change. So, if you block it, you might take your data out of the pool of potential citations that Google uses to generate answers and results.

Limiting collaborative opportunities: Blocking AI robots could prevent potential collaborations with AI researchers or developers interested in using data for legitimate purposes. Collaborations with these stakeholders could lead to valuable insights, improvements, or innovations in AI.

Unintentional blocking: Improperly configuring the robots.txt file to block AI bots could inadvertently exclude legitimate crawlers, such as search engine bots. This unintended consequence can hinder accurate data tracking and analysis, leading to missed opportunities for optimization and improvement.
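One way to catch unintentional blocking is to test a robots.txt file against two lists before publishing it: crawlers you want to keep and crawlers you mean to block. The Python sketch below does that with the standard library’s `urllib.robotparser` and contrasts a catch-all rule with targeted groups. The crawler lists, the `audit_robots` name, and the example URL are assumptions for illustration. Python’s parser is simpler than the matching rules Google documents (for example, it doesn’t support wildcards in paths), so treat it as a quick sanity check rather than a definitive verdict.

```python
from urllib.robotparser import RobotFileParser

# Example policy: search crawlers to keep, AI crawlers to block.
MUST_ALLOW = ("Googlebot", "Bingbot")
MUST_BLOCK = ("GPTBot", "CCBot", "Google-Extended")


def audit_robots(robots_txt, url="https://example.com/"):
    """Return (crawlers wrongly blocked, crawlers still allowed) for `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    wrongly_blocked = [a for a in MUST_ALLOW if not parser.can_fetch(a, url)]
    still_allowed = [a for a in MUST_BLOCK if parser.can_fetch(a, url)]
    return wrongly_blocked, still_allowed


# A tempting shortcut that also shuts out search engine crawlers.
TOO_BROAD = """\
User-agent: *
Disallow: /
"""

# Targeted groups that name only the AI crawlers.
TARGETED = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: Google-Extended
Disallow: /
"""

if __name__ == "__main__":
    for label, text in (("too broad", TOO_BROAD), ("targeted", TARGETED)):
        blocked, allowed = audit_robots(text)
        print(f"{label}: wrongly blocked={blocked}, still allowed={allowed}")
```

The catch-all version flags Googlebot and Bingbot as wrongly blocked, while the targeted version reports no problems in either list.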

When considering whether to block AI robots, you must carefully balance the advantages of content protection and control against the drawbacks mentioned above. Evaluating the specific goals, priorities, and requirements of your site and AI strategy is essential.

So, now what?

Deciding whether to block or allow AI bots is challenging. It helps to consider the following recommendations:

Assess specific needs and objectives: Carefully evaluate the needs, objectives, and concerns of your site and content before deciding. Consider factors such as the type of content, its value, and the potential risks or benefits of allowing or blocking AI bots.

Explore alternative solutions: Instead of blocking robots outright, consider alternative measures that balance content protection and data availability. For example, rate limiting, user-agent restrictions, terms of use, or API access limitations can help manage AI bot access while still allowing valuable data to be used.

Regularly review and update robots.txt: Review your robots.txt file regularly to make sure it aligns with your current strategy and circumstances. Assess the effectiveness of the measures you’ve implemented and adjust them as needed to accommodate changing threats, goals, or partnerships.

Stay informed: Keep up to date with industry guidelines, best practices, and legal regulations regarding AI bots and web scraping. Familiarize yourself with relevant policies and ensure compliance with applicable laws and regulations.

Consider collaboration opportunities: While blocking these bots may have benefits, you can also explore potential collaborations with AI researchers, organizations, or developers. Partnerships can lead to mutually beneficial outcomes, such as exchanging knowledge, research insights, or other advances in the AI field.

Seek professional advice: If you’re unsure about the best course of action for your website, consider asking for help. SEO professionals, legal experts, or AI specialists can advise you based on your needs and goals.
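Rate limiting, mentioned under “Explore alternative solutions,” happens on your server or CDN rather than in robots.txt. As a rough illustration of the idea, the Python sketch below keeps a token bucket per listed crawler: each bot may make a short burst of requests and is then throttled to a steady rate. The class names, crawler list, and capacity and rate values are hypothetical; in production you would more likely use your web server’s or CDN’s built-in rate limiting. Because it matches on the user-agent string, a bot that disguises itself won’t be caught.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` per second."""

    def __init__(self, capacity: float, rate: float):
        self.capacity = capacity
        self.rate = rate
        self.tokens = capacity
        self.updated = None  # time of the previous request; None until first use

    def allow(self, now: float) -> bool:
        if self.updated is not None:
            # Refill in proportion to the time elapsed since the last request.
            elapsed = max(0.0, now - self.updated)
            self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class CrawlerRateLimiter:
    """Hypothetical per-crawler limiter keyed on a user-agent substring."""

    def __init__(self, crawlers, capacity=5, rate=0.5):
        self.crawlers = [name.lower() for name in crawlers]
        self.capacity = capacity
        self.rate = rate
        self.buckets = {}

    def allow(self, user_agent, now=None):
        now = time.monotonic() if now is None else now
        ua = user_agent.lower()
        name = next((c for c in self.crawlers if c in ua), None)
        if name is None:
            return True  # not a listed crawler, so no limit applies
        if name not in self.buckets:
            self.buckets[name] = TokenBucket(self.capacity, self.rate)
        return self.buckets[name].allow(now)


if __name__ == "__main__":
    limiter = CrawlerRateLimiter(["GPTBot", "CCBot"], capacity=2, rate=1.0)
    gpt = "Mozilla/5.0 (compatible; GPTBot/1.0)"
    # Three requests at the same instant: the third exceeds the burst size.
    print([limiter.allow(gpt, now=0.0) for _ in range(3)])
    # One second later, one token has been refilled.
    print(limiter.allow(gpt, now=1.0))
```

Throttling instead of blocking keeps your content available to these bots while capping the load they add, which is the kind of middle ground the recommendation describes.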

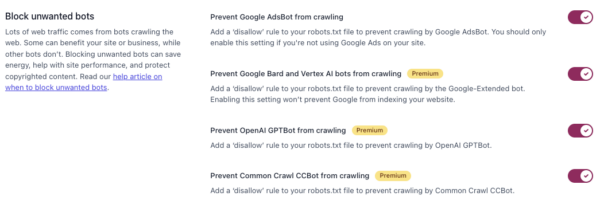

Blocking AI robots with Yoast SEO Premium

Next week, in response to the growing demand for controlling AI robots, Yoast SEO will introduce a convenient feature that simplifies the process. With just the flick of a switch, you’ll be able to block AI robots like GPTBot, CCBot, and Google-Extended. This automated functionality adds the corresponding rules to your robots.txt file, disallowing access for these crawlers.

This streamlined solution lets you protect your content from AI bots quickly and efficiently, without manual configuration or complex technical adjustments. By providing a user-friendly option, Yoast SEO Premium gives you greater control over your content and makes it easy to manage your crawler access settings.

Should you block AI robots?

The decision to block or allow AI bots like GPTBot, CCBot, and Google-Extended in your robots.txt file is a complex one that requires careful consideration. Throughout this article, we’ve explored the pros and cons of blocking these bots and discussed various factors you should consider.

On the one hand, blocking these robots can offer advantages such as protection of intellectual property, enhanced data security, and server load optimization. It gives you control over your content and privacy and preserves your brand integrity.

On the other hand, blocking AI bots may limit opportunities for AI model training, affect site visibility and indexing, and hinder potential collaborations with AI researchers and organizations. It requires a careful balance between content protection and data availability.

You must assess your specific needs and objectives to make an informed decision. Be sure to explore alternative solutions, stay up to date with industry guidelines, and consider seeking professional advice when needed. Regularly reviewing and adjusting your robots.txt file as your strategy or circumstances change is also crucial.

Ultimately, whether you block or allow these robots should align with your unique goals, priorities, and risk tolerance. Remember that there’s no one-size-fits-all approach: the optimal strategy will vary depending on individual circumstances.

In conclusion, the use of AI bots for website indexing and training raises important considerations for site owners. You’ll need to evaluate the implications and find the right balance. If you do, you’ll find a solution that aligns with your goals, protects your content, and contributes to the responsible and ethical development of artificial intelligence.
