Bard Vs ChatGPT Vs Claude

- January 7, 2024

Researchers tested the idea that an AI model might have an advantage in self-detecting its own content because the detection would leverage the same training and datasets. What they didn't expect to find was that, of the three AI models they tested, the content generated by one of them was so undetectable that even the AI that generated it couldn't detect it.

The study was conducted by researchers from the Department of Computer Science, Lyle School of Engineering at Southern Methodist University.

AI Content Detection

Many AI detectors are trained to look for the telltale signs of AI-generated content. These signs are called "artifacts," and some of them arise from the underlying transformer technology. But other artifacts are unique to each foundation model (the Large Language Model the AI is based on).

These artifacts are unique to each AI, and they come from the distinctive training data and fine-tuning, which always differ from one AI model to the next.

The researchers found evidence that it is this uniqueness that enables an AI to have greater success in self-identifying its own content, significantly better than when it tries to identify content generated by a different AI.

Bard has a better chance of identifying Bard-generated content, and ChatGPT has a higher success rate identifying ChatGPT-generated content, but…

The researchers discovered that this wasn't true for content generated by Claude. Claude had difficulty detecting content that it generated. The researchers offered an idea of why Claude was unable to detect its own content, which this article discusses further on.

This is the idea behind the research tests:

“Since every model can be trained differently, creating one detector tool to detect the artifacts created by all possible generative AI tools is hard to achieve.

Here, we develop a different approach called self-detection, where we use the generative model itself to detect its own artifacts to distinguish its own generated text from human written text.

This would have the advantage that we do not need to learn to detect all generative AI models, but we only need access to a generative AI model for detection.

This is a big advantage in a world where new models are continuously developed and trained.”

Methodology

The researchers tested three AI models:

- ChatGPT-3.5 by OpenAI

- Bard by Google

- Claude by Anthropic

All models used were the September 2023 versions.

A dataset of fifty different topics was created. Each AI model was given exactly the same prompts to write essays of about 250 words on each of the fifty topics, which produced fifty essays for each of the three AI models.

Each AI model was then given identical prompts to paraphrase its own content, generating an additional essay that was a rewrite of each original essay.

They also collected fifty human-written essays on the same fifty topics. All of the human-written essays were selected from the BBC.
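To make the size of the test set concrete, here is a minimal Python sketch of how the essays described above could be organized. It assumes one human-written essay per topic, and the `Essay` class, its field names and `build_corpus` are hypothetical illustrations, not code from the study; the essay text itself is left as a placeholder.

```python
from dataclasses import dataclass
from typing import List

MODELS = ["ChatGPT-3.5", "Bard", "Claude"]
NUM_TOPICS = 50

@dataclass(frozen=True)
class Essay:
    topic_id: int
    source: str       # a model name, or "human"
    variant: str      # "original", "paraphrased", or "human"
    text: str = ""    # placeholder: the real essays are not reproduced here

def build_corpus() -> List[Essay]:
    """Hypothetical layout: per topic, each model's original and paraphrase plus one human essay."""
    corpus = []
    for topic in range(NUM_TOPICS):
        for model in MODELS:
            corpus.append(Essay(topic, model, "original"))     # ~250-word essay
            corpus.append(Essay(topic, model, "paraphrased"))  # the model's own rewrite
        corpus.append(Essay(topic, "human", "human"))          # assumed: one BBC essay per topic
    return corpus

corpus = build_corpus()
print(len(corpus))  # 350
```

Under that assumption the corpus holds 50 × (3 × 2 + 1) = 350 essays, and each model's self-detection test works with only 50 of its own originals, 50 paraphrases and 50 human essays.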

The researchers then used zero-shot prompting to have each model self-detect the AI-generated content.

Zero-shot prompting is a type of prompting that gives the model no worked examples and relies on its ability to complete tasks it hasn't been specifically trained to do.
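A zero-shot self-detection query contains only an instruction and the text being judged, with no labeled examples of AI-written or human-written essays. The Python sketch below builds such a prompt and maps the model's free-text reply to a verdict. The prompt wording is a hypothetical paraphrase of the query the researchers quote, the helper names are illustrative only, and no specific chatbot API is assumed.

```python
from typing import Optional

def build_zero_shot_prompt(essay: str) -> str:
    # Zero-shot: only an instruction plus the text to judge, no labeled examples.
    # Hypothetical wording, loosely based on the query quoted in the study.
    return (
        "Does the following text match your writing pattern and choice of words? "
        "Answer yes or no.\n\n"
        f"Text:\n{essay}"
    )

def parse_verdict(reply: str) -> Optional[bool]:
    """Map a reply to True (self-generated), False (not), or None if unclear."""
    words = reply.strip().lower().split()
    if not words:
        return None
    first = words[0].strip(".,!?:;\"'")
    if first == "yes":
        return True
    if first == "no":
        return False
    return None

prompt = build_zero_shot_prompt("Essay text goes here.")
print(parse_verdict("Yes, it matches."))  # True
print(parse_verdict("No."))               # False
print(parse_verdict("I cannot tell."))    # None
```

Treating an unclear reply as `None` rather than forcing it into yes or no keeps ambiguous answers visible instead of silently counting them as detections or misses.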

The researchers further explained their methodology:

“We created a new instance of each AI system initiated and posed with a specific query: ‘If the following text matches its writing pattern and choice of words.’ The procedure is repeated for the original, paraphrased, and human essays, and the results are recorded. We also added the result of the AI detection tool ZeroGPT. We do not use this result to compare performance but as a baseline to show how challenging the detection task is.”
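The procedure the researchers describe, a fresh model instance per query and the same question asked of the original, paraphrased and human essays, can be sketched as a loop. In this hypothetical Python sketch, `new_session` stands in for whatever opens a new chat with a model (no real API is assumed), and a toy stub that answers "Yes" whenever it sees the word "delve" (a toy rule, not a real detection signal) replaces Bard, ChatGPT or Claude.

```python
from collections import defaultdict
from typing import Callable, Dict, List

# A "session" is any function that takes a prompt and returns the model's reply.
Session = Callable[[str], str]

def run_self_detection(
    new_session: Callable[[], Session],
    essay_sets: Dict[str, List[str]],
    prompt_for: Callable[[str], str],
) -> Dict[str, List[bool]]:
    """Ask a fresh session about every essay; True means the model claims the text."""
    results: Dict[str, List[bool]] = defaultdict(list)
    for set_name, essays in essay_sets.items():
        for essay in essays:
            session = new_session()  # new instance: no memory of earlier essays
            reply = session(prompt_for(essay))
            results[set_name].append(reply.strip().lower().startswith("yes"))
    return dict(results)

# Toy stand-in for a chatbot (hypothetical): claims any text containing "delve".
def fake_session() -> Session:
    return lambda prompt: "Yes" if "delve" in prompt else "No"

essay_sets = {
    "original": ["We delve into topic A.", "We delve into topic B."],
    "paraphrased": ["Topic A, reworded.", "We delve into B again."],
    "human": ["A BBC-style report."],
}
res = run_self_detection(fake_session, essay_sets, lambda e: f"Is this yours?\n{e}")
print(res)
```

Opening a new session for every essay keeps one verdict from influencing the next, which appears to be the reason for creating a new instance of each AI system for each query.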

They also noted that a 50% accuracy rate is equal to guessing, which can essentially be regarded as a failing level of accuracy.
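Why is 50% a failing score? Assuming accuracy is the share of essays labeled correctly on a balanced mix of AI-written and human-written essays (the article does not spell out the exact formula), a "detector" that answers "AI-generated" every time already scores 50%. A minimal Python illustration, with hypothetical names:

```python
from typing import Sequence

def accuracy(predicted: Sequence[bool], actual: Sequence[bool]) -> float:
    """Share of essays whose predicted label (True = AI-generated) is correct."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)

# Balanced toy set: 50 AI-written essays followed by 50 human-written ones.
labels = [True] * 50 + [False] * 50
always_ai = [True] * 100          # a "detector" that always answers "AI-generated"
print(accuracy(always_ai, labels))  # 0.5
```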

Results: Self-Detection

It must be noted that the researchers acknowledged that their sample size was small and said that they were not claiming the results are definitive.
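The small sample matters because, with only 50 essays per condition, a score somewhat above 50% can still be luck. As a rough sketch, not an analysis the researchers report, an exact one-sided binomial test against a coin flip shows how many correct answers out of 50 are needed before guessing becomes an unlikely explanation. The function names and the 0.05 threshold are assumptions for illustration.

```python
from math import comb

def p_value_vs_guessing(correct: int, n: int) -> float:
    """One-sided exact binomial p-value: P(X >= correct) for X ~ Binomial(n, 0.5)."""
    return sum(comb(n, k) for k in range(correct, n + 1)) / 2 ** n

def min_correct_to_beat_guessing(n: int, alpha: float = 0.05) -> int:
    """Smallest number of correct answers out of n whose p-value is below alpha."""
    for correct in range(n + 1):
        if p_value_vs_guessing(correct, n) < alpha:
            return correct
    return n + 1  # only reached if even a perfect score is not significant

needed = min_correct_to_beat_guessing(50)
print(needed, needed / 50)  # 32 0.64
```

Under these assumptions a model would need 32 of 50 correct (64%) before chance becomes an unlikely explanation, so scores only a few points above 50% deserve the caution the researchers express.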

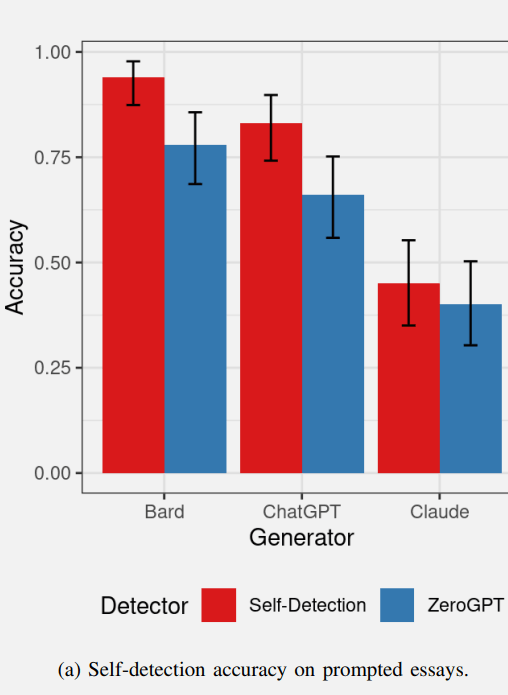

The graph shows the success rates of AI self-detection for the first batch of essays. The red values represent the AI self-detection, and the blue values represent how well the AI detection tool ZeroGPT performed.

Results Of AI Self-Detection Of Own Text Content

Bard did fairly well at detecting its own content, and ChatGPT performed similarly well at detecting its own content.

ZeroGPT, the AI detection tool, detected the Bard content very well and performed slightly less well at detecting ChatGPT content.

ZeroGPT essentially failed to detect the Claude-generated content, performing worse than the 50% threshold.

Claude was the outlier of the group because it was unable to self-detect its own content, performing significantly worse than Bard and ChatGPT.

The researchers hypothesized that Claude's output may contain fewer detectable artifacts, which would explain why both Claude and ZeroGPT were unable to detect the Claude essays as AI-generated.

So, although Claude was unable to reliably self-detect its own content, that turned out to be a sign that Claude's output was of higher quality in terms of producing fewer AI artifacts.

ZeroGPT performed better at detecting Bard-generated content than at detecting ChatGPT and Claude content. The researchers hypothesized that Bard may generate more detectable artifacts, making Bard easier to detect.

So in terms of self-detecting content, Bard may be producing more detectable artifacts and Claude fewer.

Results: Self-Detecting Paraphrased Content

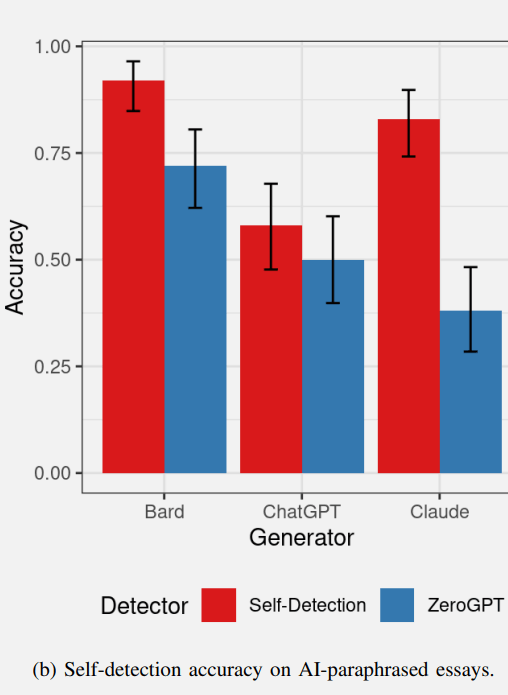

The researchers hypothesized that AI models would be able to self-detect their own paraphrased text because the artifacts created by the model (as detected in the original essays) should also be present in the rewritten text.

However, the researchers acknowledged that the prompts for writing the text and for paraphrasing it are different, and that each rewrite differs from the original text, which could lead to different self-detection results for the paraphrased text.

The results of self-detecting the paraphrased text were indeed different from the results of the original essay test.

- Bard was able to self-detect the paraphrased content at a similar rate.

- ChatGPT was not able to self-detect the paraphrased content at a rate much higher than 50% (which is equal to guessing).

- ZeroGPT's performance was similar to its results in the previous test, though slightly worse.

Perhaps the most interesting result came from Anthropic's Claude.

Claude was able to self-detect the paraphrased content (even though it was not able to detect its original essays in the previous test).

It's an interesting result: Claude's original essays apparently had so few artifacts signaling that they were AI-generated that even Claude was unable to detect them.

Yet it was able to self-detect the paraphrases, while ZeroGPT could not.

The researchers remarked on this test:

“The finding that paraphrasing prevents ChatGPT from self-detecting while increasing Claude's ability to self-detect is very interesting and may be the result of the inner workings of these two transformer models.”

Screenshot of Self-Detection of AI Paraphrased Content

These tests yielded almost unpredictable results, particularly with regard to Anthropic's Claude, and this trend continued in the test of how well the AI models detected each other's content, which had an interesting wrinkle.

Results: AI Models Detecting Each Other's Content

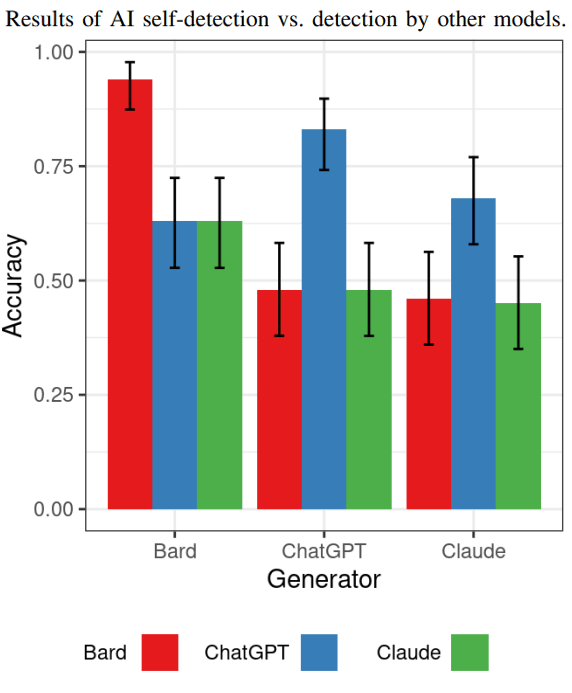

The next test showed how good each AI model was at detecting the content generated by the other AI models.

If it's true that Bard generates more artifacts than the other models, will the other models be able to easily detect Bard-generated content?

The results show that yes, Bard-generated content is the easiest for the other AI models to detect.

Regarding ChatGPT-generated content, both Claude and Bard were unable to detect it as AI-generated (just as Claude was unable to detect it).

ChatGPT was able to detect Claude-generated content at a higher rate than both Bard and Claude, but that higher rate was not much better than guessing.

The finding here is that none of them were very good at detecting each other's content, which the researchers suggested may show that self-detection is a promising area of study.
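One way to summarize this cross-detection test is a detector-by-generator table of detection rates, where the diagonal holds self-detection and everything off the diagonal is one model judging another. The Python sketch below compares each detector's diagonal entry with its average on the other models. The function name is hypothetical, and the generator names "A" and "B" and the rates in the usage example are placeholders, not the study's results.

```python
from typing import Dict, Tuple

# rates[(detector, generator)] = share of the generator's essays that the
# detector labeled as AI-generated.
Rates = Dict[Tuple[str, str], float]

def self_vs_cross(rates: Rates) -> Dict[str, Tuple[float, float]]:
    """For each detector, return (self-detection rate, mean rate on other models)."""
    detectors = sorted({d for d, _ in rates})
    summary = {}
    for det in detectors:
        self_rate = rates[(det, det)]
        others = [r for (d, g), r in rates.items() if d == det and g != det]
        cross_mean = sum(others) / len(others) if others else float("nan")
        summary[det] = (self_rate, cross_mean)
    return summary

# Placeholder numbers for two hypothetical models.
rates = {("A", "A"): 0.8, ("A", "B"): 0.5, ("B", "A"): 0.6, ("B", "B"): 0.7}
summary = self_vs_cross(rates)
print(summary)
```

A detector whose diagonal entry clearly exceeds its off-diagonal average fits the self-detection idea; off-diagonal values near 0.5 correspond to the "not much better than guessing" pattern described above.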

The graph at the start of this section shows the results of this specific test.

At this point it should be noted that the researchers don't claim that these results are conclusive about AI detection in general. The focus of the research was to test whether AI models could succeed at self-detecting their own generated content. The answer is mostly yes: they do a better job at self-detecting, but the results are similar to what was found with ZeroGPT.

The researchers commented:

“Self-detection shows similar detection power compared to ZeroGPT, but note that the goal of this study is not to claim that self-detection is superior to other methods, which would require a large study to compare to many state-of-the-art AI content detection tools. Here, we only investigate the models' basic ability of self detection.”

Conclusions And Takeaways

The results of the test confirm that detecting AI-generated content is not an easy task. Bard is able to detect both its own content and its paraphrased content.

ChatGPT can detect its own content but works less well on its paraphrased content.

Claude is the standout because it's not able to reliably self-detect its own content, yet it was able to detect the paraphrased content, which was somewhat weird and unexpected.

Detecting Claude's original essays and paraphrased essays was a challenge for ZeroGPT and for the other AI models.

The researchers noted about the Claude results:

“This seemingly inconclusive result needs more consideration since it is driven by two conflated causes.

1) The ability of the model to create text with very few detectable artifacts. Since the goal of these systems is to generate human-like text, fewer artifacts that are harder to detect means the model gets closer to that goal.

2) The inherent ability of the model to self-detect can be affected by the used architecture, the prompt, and the applied fine-tuning.”

The researchers had this further observation about Claude:

“Only Claude cannot be detected. This indicates that Claude might produce fewer detectable artifacts than the other models.

The detection rate of self-detection follows the same trend, indicating that Claude creates text with fewer artifacts, making it harder to distinguish from human writing.”

But of course, the weird part is that Claude was also unable to self-detect its own original content, unlike the other two models, which had higher success rates.

The researchers indicated that self-detection remains an interesting area for continued research. They propose that further studies could use larger datasets with a greater diversity of AI-generated text, test more AI models, and compare against more AI detectors, and lastly they suggested studying how prompt engineering may influence detection levels.

Read the original research paper and the abstract here:

AI Content Self-Detection for Transformer-based Large Language Models

Featured Image by Shutterstock/SObeR 9426
