What it is and why you should care about it • Yoast

- May 17, 2023

Bots have become an integral part of the digital space today. They help us order groceries, play music on our Slack channel, and pay our colleagues back for the delicious smoothies they bought us. Bots also populate the internet to carry out the functions they’re designed for. But what does this mean for website owners? And (perhaps more importantly) what does this mean for the environment? Read on to find out what you need to know about bot traffic and why you should care about it!

What’s a bot?

Let’s start with the basics: A bot is a software application designed to perform automated tasks over the internet. Bots can imitate or even replace the behavior of a real user. They’re very good at executing repetitive and mundane tasks. They’re also fast and efficient, which makes them a perfect choice if you need to do something on a large scale.

What’s bot traffic?

Bot traffic refers to any non-human traffic to a website or app, which is a very normal thing on the internet. If you own a website, it’s very likely that you’ve been visited by a bot. As a matter of fact, bot traffic accounts for almost 30% of all internet traffic at the moment.

Is bot traffic bad?

You’ve probably heard that bot traffic is bad for your site. And in many cases, that’s true. But there are good and legitimate bots too. It depends on the purpose of the bots and the intentions of their creators. Some bots are essential for running digital services like search engines or personal assistants. However, some bots want to brute-force their way into your website and steal sensitive information. So, which bots are ‘good’ and which ones are ‘bad’? Let’s dive a bit deeper into this topic.

The ‘good’ bots

‘Good’ bots perform tasks that don’t cause harm to your website or server. They announce themselves and let you know what they do on your website. The most popular ‘good’ bots are search engine crawlers. Without crawlers visiting your website to discover content, search engines have no way to serve you information when you’re searching for something. So when we talk about ‘good’ bot traffic, we’re mostly talking about these bots.

Besides search engine crawlers, some other good internet bots include:

- SEO crawlers: If you’re in the SEO space, you’ve probably used tools like Semrush or Ahrefs to do keyword research or gain insight into competitors. For those tools to serve you information, they also need to send out bots to crawl the web and gather data.

- Commercial bots: Commercial companies send these bots to crawl the web to gather information. For instance, research companies use them to monitor news on the market; ad networks need them to monitor and optimize display ads; ‘coupon’ websites gather discount codes and sales programs to serve users on their websites.

- Site-monitoring bots: They help you monitor your website’s uptime and other metrics. They periodically check and report data, such as your server status and uptime duration. This allows you to take action when something’s wrong with your site.

- Feed/aggregator bots: They collect and combine newsworthy content to deliver to your site visitors or email subscribers.
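To give a feel for what a site-monitoring bot does on each visit, here is a minimal Python sketch of a single uptime check using only the standard library. The function name `check_site`, the returned fields, and the 5-second timeout are illustrative assumptions, not how any particular monitoring service works; a real monitor would run such a check on a schedule and alert you when it fails.

```python
import time
import urllib.error
import urllib.request


def check_site(url: str, timeout: float = 5.0) -> dict:
    """Request `url` once and report whether it answered, with which status, and how fast."""
    started = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            status = response.status
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status such as 404 or 503.
        status = err.code
    except OSError:
        # No usable answer: DNS failure, refused connection, or timeout
        # (URLError and socket timeouts are both OSError subclasses).
        return {"url": url, "up": False, "status": None, "seconds": None}
    elapsed = time.monotonic() - started
    return {
        "url": url,
        "up": 200 <= status < 400,  # urlopen follows redirects, so this is the final status
        "status": status,
        "seconds": round(elapsed, 3),
    }
```

Calling `check_site("https://example.com/")` returns a dictionary with `up`, `status`, and `seconds` keys; a site-monitoring service essentially repeats this kind of request at fixed intervals and keeps the history.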

The ‘bad’ bots

‘Bad’ bots are created with malicious intentions in mind. You’ve probably seen spam bots that spam your website with nonsense comments, irrelevant backlinks, and atrocious advertisements. And maybe you’ve also heard of bots that take people’s spots in online raffles, or bots that buy out the good seats at concerts.

It’s because of these malicious bots that bot traffic gets a bad reputation, and rightly so. Unfortunately, a significant number of bad bots populate the internet nowadays.

Here are some bots you don’t want on your site:

- Email scrapers: They harvest email addresses and send malicious emails to those contacts.

- Comment spam bots: They spam your website with comments and links that redirect people to a malicious website. In many cases, they spam your website to advertise or to try to get backlinks to their sites.

- Scraper bots: These bots come to your website and download everything they can find. That can include your text, images, HTML files, and even videos. Bot operators will then reuse your content without permission.

- Bots for credential stuffing or brute force attacks: These bots will try to gain access to your website to steal sensitive information. They do this by trying to log in like a real user.

- Botnets, zombie computers: They’re networks of infected devices used to perform DDoS attacks. DDoS stands for distributed denial-of-service. During a DDoS attack, the attacker uses such a network of devices to flood a website with bot traffic. This overwhelms your web server with requests, resulting in a slow or unusable website.

- Inventory and ticket bots: They visit websites to buy up tickets for entertainment events or to bulk-purchase newly released products. Brokers use them to resell tickets or products at a higher price to make a profit.

Why you should care about bot traffic

Now that you’ve got some knowledge about bot traffic, let’s talk about why you should care.

For your website performance

Malicious bot traffic strains your web server and sometimes even overloads it. These bots take up your server bandwidth with their requests, making your website slow or, in the case of a DDoS attack, completely inaccessible. In the meantime, you might have lost traffic and sales to your competitors.

In addition, malicious bots disguise themselves as regular human traffic, so they might not be visible when you check your website statistics. The result? You might see random spikes in traffic without understanding why. Or, you might be confused as to why you receive traffic but no conversions. As you can imagine, this can hurt your business decisions because you don’t have the correct data.

For your site security

Malicious bots are also bad for your site’s security. They will try to brute-force their way into your website using various username/password combinations, or seek out weak entry points and report them to their operators. If you have security vulnerabilities, these malicious players might even attempt to install viruses on your website and spread those to your users. And if you own an online store, you’ll have to manage sensitive information like credit card details that hackers would love to steal.
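One common countermeasure against brute-force logins is to count failed attempts per IP address within a time window and block or slow down addresses that exceed a limit. The Python sketch below shows that idea; the class name `FailedLoginTracker` and the default limits (5 failures in 300 seconds) are hypothetical, and this is not how any specific security plugin implements it.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class FailedLoginTracker:
    """Flag IP addresses that fail to log in too often within a sliding time window."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 300.0):
        # Illustrative defaults, not recommended values: tune them for your own site.
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures = defaultdict(deque)  # IP address -> timestamps of recent failures

    def record_failure(self, ip: str, now: Optional[float] = None) -> bool:
        """Record one failed login from `ip`; return True once it reaches the limit."""
        now = time.time() if now is None else now
        recent = self._failures[ip]
        recent.append(now)
        # Forget failures that have slid out of the window.
        while now - recent[0] > self.window_seconds:
            recent.popleft()
        return len(recent) >= self.max_failures
```

In a login handler you would call `tracker.record_failure(client_ip)` after each failed attempt and, for instance, block that IP at the web server or firewall once it returns `True`. Credential stuffing spread across many addresses (for example, from a botnet) can stay under a per-IP limit, so this is only one layer of defense.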

For the environment

Did you know that bot traffic affects the environment? When a bot visits your site, it makes an HTTP request to your server asking for information. Your server needs to respond to this request, then return the necessary information. Whenever this happens, your server must spend a small amount of energy to complete the request. Now, consider how many bots there are on the internet. You can probably imagine that the amount of energy spent on bot traffic is enormous!

In this sense, it doesn’t matter whether a good or bad bot visits your site. The process is still the same. Both use energy to perform their tasks, and both have consequences for the environment.

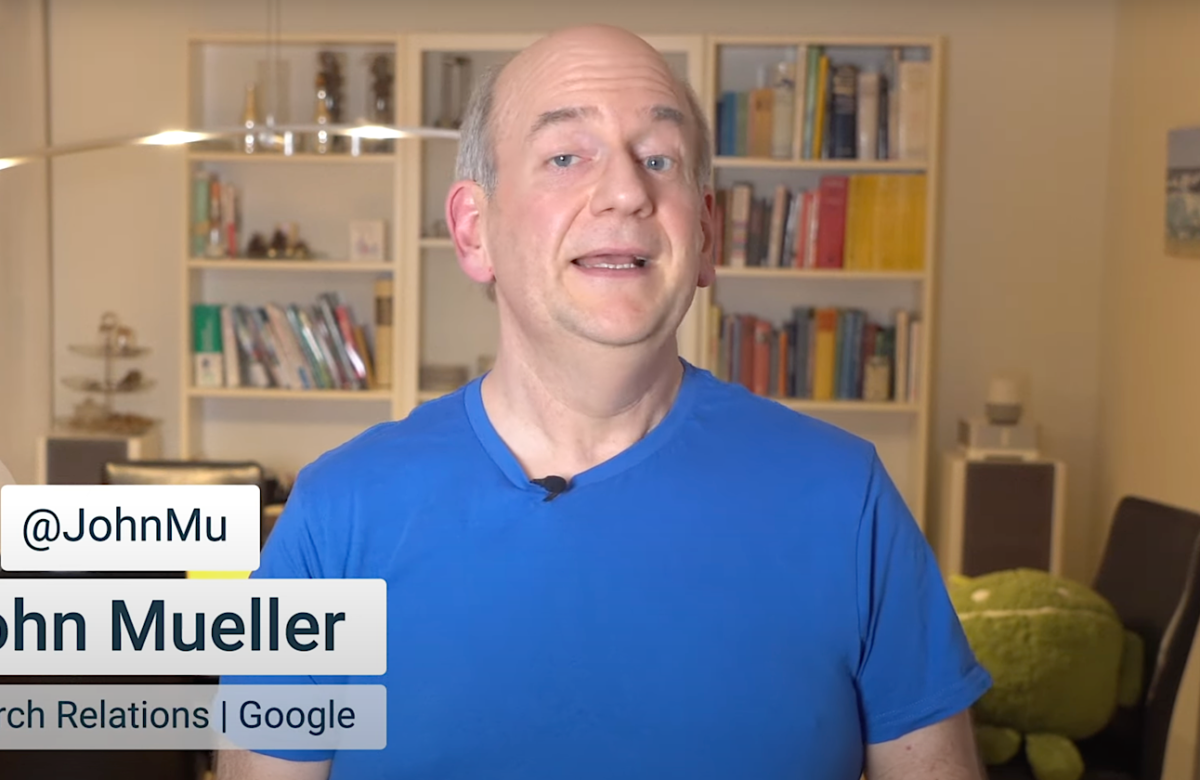

Even though search engines are an essential part of the internet, they’re guilty of being wasteful too. They can visit your site too many times, and not even pick up the right changes. We recommend checking your server log to see how many times crawlers and bots visit your site. Additionally, the Crawl Stats report in Google Search Console tells you how many times Google crawls your site. You might be surprised by some of the numbers there.
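If your server writes access logs in the “combined” format that Apache and nginx commonly use, a short Python script can tally how many requests came from crawlers that announce themselves in their user-agent string. The token list below is an illustrative, incomplete assumption, and user agents can be spoofed, so these counts only cover bots that identify themselves honestly.

```python
import re
from collections import Counter

# Matches one line of the "combined" access-log format.
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)

# Illustrative, incomplete list of user-agent tokens that well-known crawlers announce.
CRAWLER_TOKENS = ("Googlebot", "bingbot", "YandexBot", "DuckDuckBot", "AhrefsBot", "SemrushBot")


def count_crawler_hits(lines):
    """Count requests per announced crawler; all other parsed requests go to 'unlisted'."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None:
            continue  # skip lines that are not in combined log format
        agent = match.group("agent").lower()
        for token in CRAWLER_TOKENS:
            if token.lower() in agent:
                counts[token] += 1
                break
        else:
            counts["unlisted"] += 1
    return counts
```

For example, pass it an open log file such as `open("access.log")` (a placeholder path) and call `.most_common()` on the result to list the busiest crawlers first.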

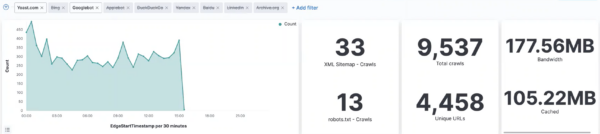

A small case study from Yoast

Let’s take Yoast, for instance. On any given day, Google crawlers can visit our website 10,000 times. It might seem reasonable to visit us a lot, but they only crawl 4,500 unique URLs. That means energy was spent crawling the same URLs over and over. Even though we regularly publish and update our website content, we probably don’t need all those crawls. These crawls aren’t just for pages; crawlers also go through our images, CSS, JavaScript, etc.
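The gap between total crawls and unique URLs is easy to compute once you have a crawler’s request paths. The sketch below assumes you have already extracted those paths from your log (for example, only the lines whose user agent contains `Googlebot`); `crawl_summary` is a hypothetical helper name.

```python
def crawl_summary(crawled_paths):
    """Summarize how many crawler requests went to URLs that were already crawled."""
    paths = list(crawled_paths)
    total = len(paths)
    unique = len(set(paths))
    return {
        "requests": total,
        "unique_urls": unique,
        "repeat_requests": total - unique,
        "requests_per_url": round(total / unique, 2) if unique else 0.0,
    }
```

Plugging in the figures above, 10,000 requests over 4,500 unique URLs gives 5,500 repeat requests, or about 2.22 requests per URL.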

But that’s not all. Google’s bots aren’t the only ones visiting us. There are bots from other search engines, digital services, and even bad bots too. Such unnecessary bot traffic strains our web server and wastes energy that could otherwise be used for other valuable activities.

What can you do against ‘bad’ bots?

You can try to detect bad bots and block them from entering your site. This will save you a lot of bandwidth and reduce strain on your server, which in turn helps to save energy. The most basic way to do this is to block an individual IP address or an entire range of IP addresses. You should block an IP address if you identify irregular traffic from that source. This approach works, but it’s labor-intensive and time-consuming.
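Blocking normally happens in your firewall, web server, or CDN configuration, but the decision logic is simple enough to sketch in Python with the standard `ipaddress` module. The blocklist entries, the `max_requests` threshold, and the function names are hypothetical; the addresses come from ranges reserved for documentation.

```python
import ipaddress
from collections import Counter

# Hypothetical blocklist: a single address (/32) and an entire range (/24).
BLOCKLIST = [ipaddress.ip_network(net) for net in ("203.0.113.7/32", "198.51.100.0/24")]


def is_blocked(ip: str) -> bool:
    """Return True if `ip` is on the blocklist, either directly or as part of a range."""
    address = ipaddress.ip_address(ip)
    # An IPv6 address is never "in" an IPv4 network, so mixed versions simply return False.
    return any(address in network for network in BLOCKLIST)


def irregular_sources(request_ips, max_requests: int):
    """Return the IPs that sent more than `max_requests` requests in the given sample."""
    counts = Counter(request_ips)
    return sorted(ip for ip, n in counts.items() if n > max_requests)
```

`irregular_sources` only flags candidates; before adding one to the blocklist, check that it isn’t a legitimate crawler or a shared address such as a corporate proxy.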

Alternatively, you can use a bot management solution from providers like Cloudflare. These companies have an extensive database of good and bad bots. They also use AI and machine learning to detect malicious bots and block them before they can cause harm to your site.

Security plugins

Additionally, you should install a security plugin if you’re running a WordPress website. Some of the more popular security plugins (like Sucuri Security or Wordfence) are maintained by companies that employ security researchers who monitor and patch issues. Some security plugins automatically block specific ‘bad’ bots for you. Others let you see where unusual traffic comes from, then let you decide how to deal with that traffic.

What about the ‘good’ bots?

As we mentioned earlier, ‘good’ bots are good because they’re essential and transparent in what they do. But they can still consume a lot of energy. Not to mention, these bots might not even be useful to you. Even though what they do is considered ‘good’, they could still be disadvantageous to your website and the environment. So, what can you do about the good bots?

1. Block them if they’re not useful

You have to decide whether or not you want these ‘good’ bots to crawl your site. Does their crawling your site benefit you? More specifically: Does it benefit you more than it costs your servers, their servers, and the environment?

Let’s take search engine bots, for instance. Google is not the only search engine out there. Most likely, crawlers from other search engines have visited you as well. What if a search engine has crawled your site 500 times today, while only bringing you ten visitors? Is that still useful? If that’s the case, you should consider blocking them, since you don’t get much value from this search engine anyway.
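One way to turn that reasoning into a rule is sketched below in Python: compare each crawler’s crawls with the visitors it refers, then generate robots.txt groups that disallow the crawlers above a threshold. The crawler names, the statistics, and the threshold of 20 crawls per visitor are hypothetical, and robots.txt only works for bots that choose to respect it. The result is checked with Python’s built-in `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser


def crawlers_to_block(stats, max_crawls_per_visitor):
    """Pick crawlers whose crawl volume is out of proportion to the visitors they send.

    `stats` maps a crawler's user-agent token to (crawls, visitors referred).
    """
    return sorted(
        agent
        for agent, (crawls, visitors) in stats.items()
        if crawls / max(visitors, 1) > max_crawls_per_visitor
    )


def robots_txt_blocking(agents):
    """Build a robots.txt that disallows everything for `agents` and nothing for others."""
    groups = [f"User-agent: {agent}\nDisallow: /" for agent in agents]
    groups.append("User-agent: *\nDisallow:")  # an empty Disallow allows everything
    return "\n\n".join(groups) + "\n"


# Hypothetical numbers: the first entry mirrors the 500-crawls/10-visitors example.
stats = {"ExampleSearchBot": (500, 10), "OtherSearchBot": (300, 40)}
robots_txt = robots_txt_blocking(crawlers_to_block(stats, max_crawls_per_visitor=20))

# Read the generated file back with the standard-library parser.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
print(parser.can_fetch("ExampleSearchBot", "https://example.com/blog/"))  # False
print(parser.can_fetch("OtherSearchBot", "https://example.com/blog/"))    # True
```

With these numbers, `ExampleSearchBot` makes 50 crawls per visitor and gets blocked, while `OtherSearchBot` makes 7.5 and keeps its access.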

2. Limit the crawl rate

If bots support the crawl-delay directive in robots.txt, you should try to limit their crawl rate. This way, they won’t come back every 20 seconds to crawl the same links over and over. Because let’s be honest, you probably don’t update your website’s content 100 times on any given day, even if you have a larger website.

You should experiment with the crawl rate and monitor its effect on your website. Start with a slight delay, then increase the number when you’re sure it doesn’t have negative consequences. Plus, you can assign a specific crawl delay for crawlers from different sources. Unfortunately, Google doesn’t support crawl delay, so you can’t use this for Googlebot.
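A per-crawler `Crawl-delay` setup might look like the Python sketch below, which writes one robots.txt group per crawler and then reads the values back with `urllib.robotparser`. The crawler names and delay values are hypothetical; whether a crawler honors `Crawl-delay` is up to that crawler, and as noted above, Google ignores it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical crawler names and delays (seconds between requests).
# Start small and raise a value only after checking the effect on your site.
CRAWL_DELAYS = {"ExampleSearchBot": 10, "ExampleSEOBot": 5}


def robots_txt_with_delays(delays):
    """Build one robots.txt group per crawler, each with its own Crawl-delay."""
    groups = [
        f"User-agent: {agent}\nCrawl-delay: {seconds}\nDisallow:"  # empty Disallow: crawl everything
        for agent, seconds in delays.items()
    ]
    return "\n\n".join(groups) + "\n"


robots_txt = robots_txt_with_delays(CRAWL_DELAYS)
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
print(parser.crawl_delay("ExampleSearchBot"))  # 10
print(parser.crawl_delay("Googlebot"))         # None: no group matches this crawler
```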

3. Help them crawl more efficiently

There are a lot of places on your website where crawlers have no business going. Your internal search results, for instance. That’s why you should block their access via robots.txt. This not only saves energy, but also helps to optimize your crawl budget.
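For a WordPress site, internal search results typically live at URLs like `/?s=term` or `/search/term/`; assuming that URL structure, the robots.txt rules below keep compliant crawlers out of them. `is_crawlable` is a hypothetical helper that checks URLs against those rules with `urllib.robotparser`, which matches rules by path prefix and does not support the `*` wildcards some search engines understand.

```python
from urllib.robotparser import RobotFileParser

# Assumption: WordPress-style internal search URLs (/?s=term and /search/term/).
ROBOTS_TXT = """\
User-agent: *
Disallow: /?s=
Disallow: /search/
"""


def is_crawlable(url: str, agent: str = "Googlebot") -> bool:
    """Check whether `agent` may fetch `url` under the rules in ROBOTS_TXT."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(agent, url)
```

With these rules, `is_crawlable("https://example.com/?s=smoothies")` and `is_crawlable("https://example.com/search/smoothies/")` are `False`, while regular content such as `https://example.com/blog/bot-traffic/` stays crawlable.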

Next, you can help bots crawl your site better by removing unnecessary links that your CMS and plugins automatically create. For instance, WordPress automatically creates an RSS feed for your website comments. This RSS feed has a link, but hardly anybody looks at it, especially if you don’t have a lot of comments. Therefore, this RSS feed might not bring you any value. It just creates another link for crawlers to crawl repeatedly, wasting energy in the process.
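To see which feed links your pages advertise to crawlers, you can scan the HTML `<head>` for `<link rel="alternate">` tags with an RSS or Atom type, which is how WordPress usually announces its feeds. This Python sketch uses the standard `html.parser` module; `find_feed_links` is a hypothetical helper name, and you would pass it the HTML of one of your own pages.

```python
from html.parser import HTMLParser


class FeedLinkFinder(HTMLParser):
    """Collect <link rel="alternate"> tags that advertise RSS or Atom feeds."""

    FEED_TYPES = {"application/rss+xml", "application/atom+xml"}

    def __init__(self):
        super().__init__()
        self.feeds = []  # (title, href) pairs in document order

    def handle_starttag(self, tag, attrs):
        # Self-closing <link ... /> tags also arrive here via handle_startendtag.
        if tag != "link":
            return
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower().split()
        if "alternate" in rel and attributes.get("type") in self.FEED_TYPES:
            self.feeds.append((attributes.get("title"), attributes.get("href")))


def find_feed_links(html: str):
    """Return the (title, href) pairs of all feed links found in `html`."""
    finder = FeedLinkFinder()
    finder.feed(html)
    finder.close()
    return finder.feeds
```

If the list includes a comments feed you don’t need, that is one more URL crawlers will keep revisiting.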

Optimize your website crawl with Yoast SEO

Yoast SEO has a useful and sustainable new setting: the crawl optimization settings! With over 20 available toggles, you’ll be able to turn off the unnecessary things that WordPress automatically adds to your site. You can see the crawl settings as a way to easily clean your site of unwanted overhead. For example, you have the option to clean up your site’s internal search to prevent SEO spam attacks!

Even if you’ve only started using the crawl optimization settings today, you’re already helping the environment!

Read more: SEO basics: What is crawlability? »
